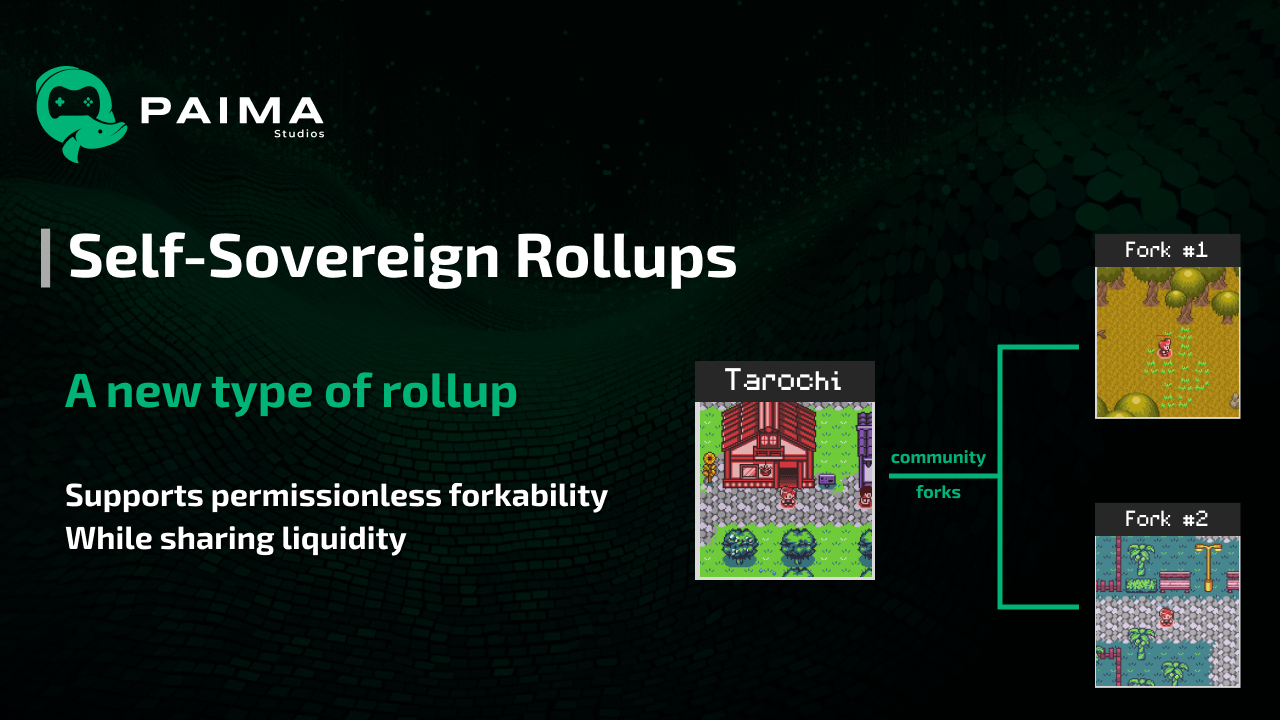

Most decentralized games today reuse infrastructure built for decentralized finance applications to bootstrap their development at a lower cost. However, because these are not purpose-built solutions, they also bring many unintended consequences. We propose a new type of rollup, which we call Self-Sovereign Rollups, that is purpose-built for gaming use-cases to unlock the next frontier of possibilities.

Definitions

"Sovereignty" refers to the full right and power of a body to govern itself without any interference from outside sources or bodies. In the context of "self-sovereignty," the term is used to describe the concept that an individual has the right to govern themselves, possessing autonomy and control over their personal decisions, data, privacy, and assets. This concept is often discussed in the fields of digital identity and personal data protection, where self-sovereignty emphasizes the importance of individuals having control over their own digital identities and personal information without dependency on external authorities or systems.

Therefore, we define a "Self-Sovereign Rollup" as a rollup where the user not only has full control over their interpretation of the rollup state, but has it without having to create a new identity controlled by the rollup and without having to give up custody of their assets to the rollup. They remain in full control, and no DAO, developer, sequencer or any other player can take that from them.

Background

The trend of trying to decentralize otherwise centralized systems has been growing since the release of Bitcoin in 2009 targeting finance. This trend has grown not only to cover a full range of decentralized finance (DeFi) applications, but to also cover many new fields such as organizational structures (DAOs), identity (SSI), art (NFTs), infrastructure (DePIN), and more. One of these latest trends is trying to decentralize online games as well, where instead of creating Decentralized Autonomous Organizations, developers are now attempting to create decentralized Autonomous Worlds (AWs).

Developers and players have cited multiple reasons for their excitement in these worlds:

- stronger ownership of game assets (controlled by a user's wallet)

- game permanence (no centralized company in charge of operating servers)

- more player control over the game's direction (DAO-ification)

- easier financialization of game assets (access to open markets)

- composability between games (and other systems)

- easier moddability (no private API)

However, tackling a new domain comes with new challenges to solve.

Challenges of scalability

One of the most pressing challenges for games to tackle is scalability. Online games like FF14 peak at over 20,000 daily active players, and so if we want to replicate the same commercial success in autonomous worlds, we need to achieve at least comparable levels of scalability.

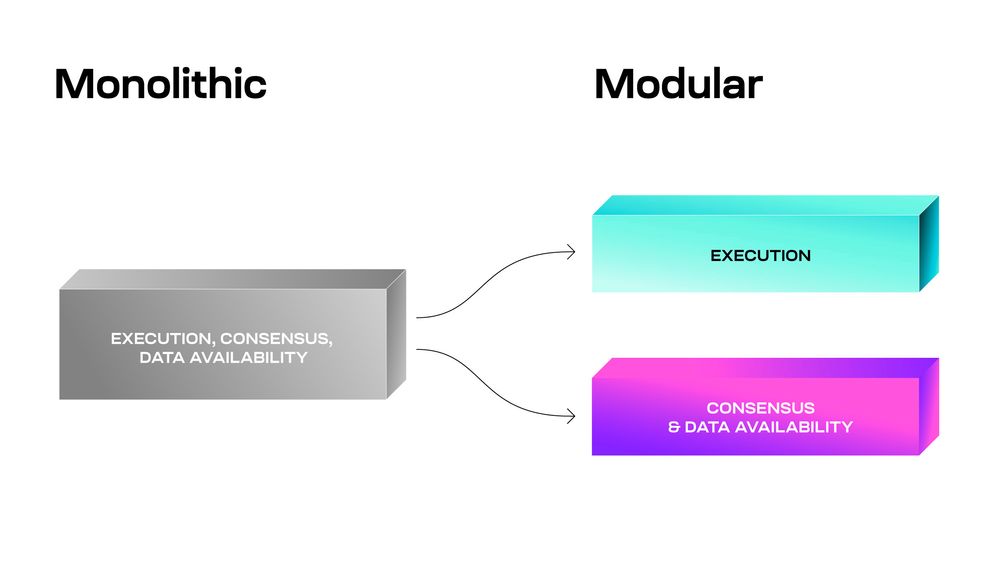

One of the most common ways to approach this goal is to leverage Layer 2 (L2) solutions (i.e. rollups). Typical monolithic blockchains (ex: Layer 1 chains like the Solana L1) have a single validator set that is in charge of managing every part of the platform, but rollups as a technology allow separating part of the execution to a different set of parties (typically called sequencers).

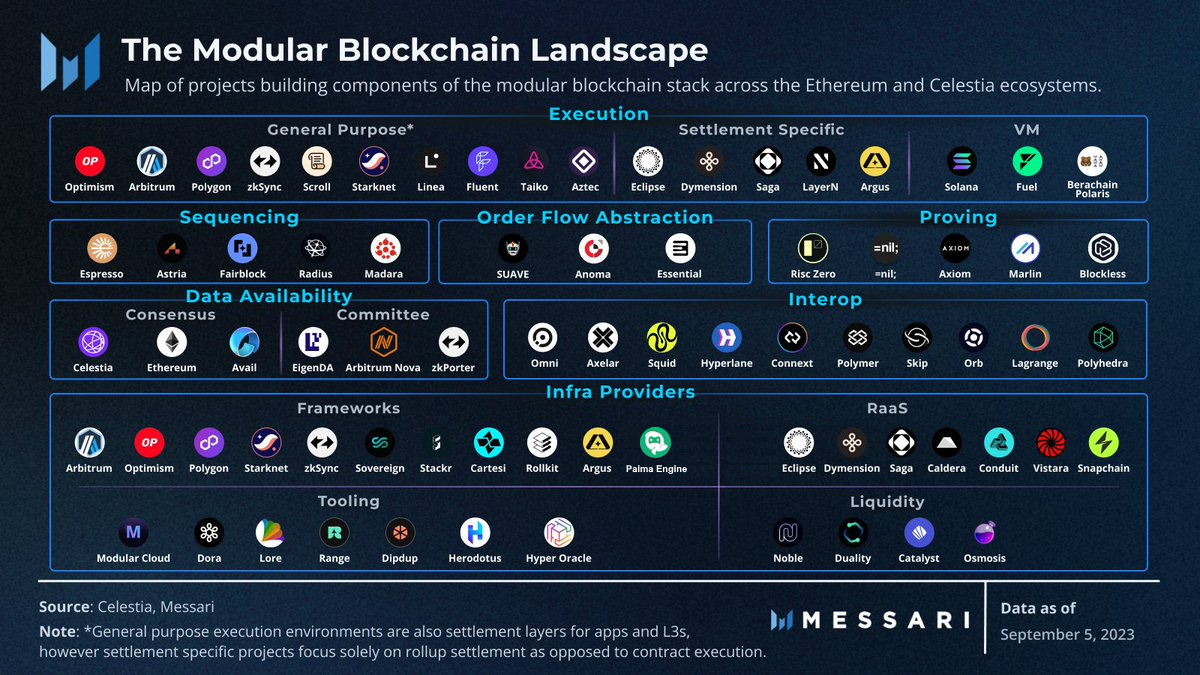

In fact, there are multiple frameworks made precisely for this such as OP Stack and Arbitrum Orbit, so taking this approach is relatively accessible even to developers without deep web3 experience. However, these stacks were not developed for games - rather, they were designed for financial use-cases.

Why doesn't using a rollup stack optimized for financial use-cases work well for gaming? Let's explore some of the implications:

- Harder user onboarding: for a user to start using your application, they now typically have to bridge funds over to your game's rollup, interact with your game, then return any funds to the underlying chain when they're done. For a financial use-case, users may be willing to go through the trouble (since they're interacting with the platform with the intent to make money), but such is often not the case for gaming (especially if the user is not a crypto-native).

- Loss of composability: by building your game on a separate layer, you lose composability with applications on the underlying L1. This means your game loses access to NFT marketplaces, decentralized exchanges (DEXs), oracles and all other applications you may want to compose with. Some teams go as far as spending a lot of business-development or engineering effort porting applications to their game-specific rollup, but this is usually a massive distraction (their core business was to build a game, not to try and establish a whole new blockchain ecosystem). This is different from financial use-cases where building a financial stack is typically the goal.

- Increased centralization: rollups require some party to run the new execution layer. Although for financial applications you can argue people have a financial interest to participate & validate, that is much less true for games where, unless you capture the heart of your audience, people are unlikely to bother contributing to the operation of the network. This de facto makes the game developer the sole owner of this L2 and therefore a centralized point of trust, which leads to 2 follow-up problems:

  - Loss of persistence: if the game company shuts down their sequencer, the game will die with it (the definition of death here depends on the exact technical details of the L2 stack used, but in the worst case it may include not just the inability for users to continue playing the game but a loss of the entire game historical data).

  - Increased financial risk: these rollups will typically have an upgrade mechanism, and therefore the game developers will most likely end up as the sole owner of the upgrade key, allowing them to steal all assets held on the L2 at any time. This problem is even worse for certain types of rollups (optimistic rollups - we will define this later), which are only financially safe if somebody is actively monitoring the sequencer at all times to check that it is being honest.

By comparing this with the list of why people are excited about decentralized games, we can see that building a game-specific rollup actually negates nearly all points that might get people excited about what you are building. There are a few ongoing efforts to mitigate this impact:

- "gaming rollups" such as Redstone and Xai whose goal is to act as a shared gaming rollup between multiple games. These help to a certain extent:

  - Users only have to onboard once for all games in the rollup

  - Composability between games deployed to the same gaming rollup

  - Increase decentralization by having multiple studios and communities pitch in to network operation

- recursive proofs (a type of ZK rollup that we will define later): the concept of ZK proofs is usually to have a succinct proof of the correctness of a statement (if you aren't convinced this is possible, you may be interested in this example). Recursive proofs are a special case of these proofs where you can combine multiple proofs into a single proof. In a blockchain context, this means that proofs of correctness of multiple games can be aggregated together, which lessens the impact of sharing limited computation between projects (you can see our work on this topic here).

These mitigation strategies are sure to continue to play an important role going forward. However, no matter which strategy you pick, you still end up with some level of onboarding difficulties, asset fragmentation, composability constraints and decentralization hits. Overcoming these limitations will be one of the key benefits of our construction.

Types of rollups

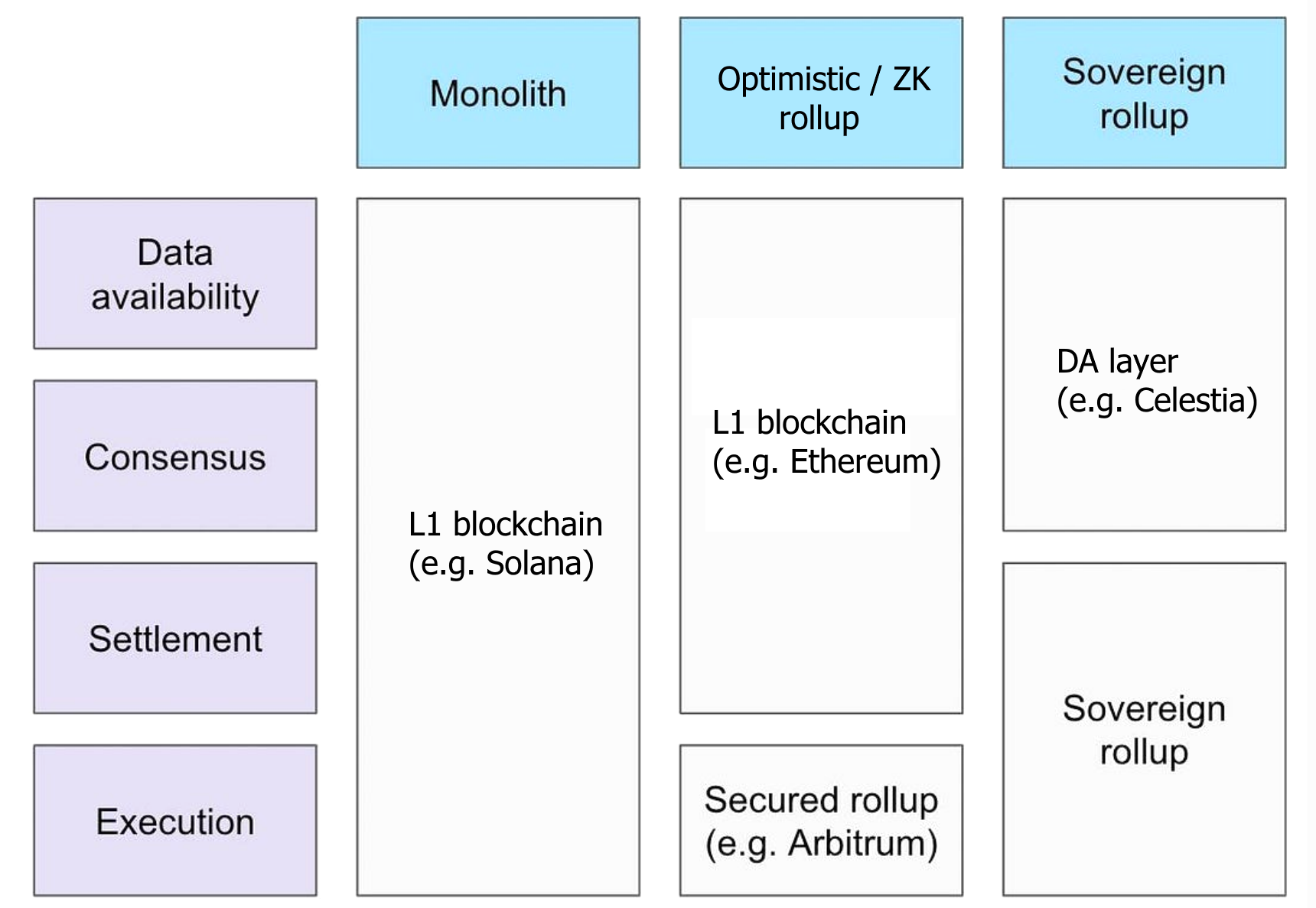

Although rollups perform execution under a different environment than the underlying L1, under the strict definition of the term "rollup" you are required to post all the data required for anybody to be able to redo this computation publicly on the L1 as well.

The difference between rollup types, therefore, is what data (beyond what is required to redo the computation) you also post to the L1.

There are 3 popular variants:

- Sovereign rollup: do not post anything other than just the minimal amount of data.

  Popular examples: Paima Engine, L2s on Celestia, most infra for Bitcoin

- ZK rollups: additionally post a ZK proof of the result. As an additional constraint, the ZK proof must be verifiable on the L1 virtual machine.

  Popular examples: ZK Sync, Zeko, Starknet, Polygon zkEVM

- Optimistic rollups: additionally post your assertion of the result of the calculation. As an additional constraint, if somebody believes the result you asserted and posted to the L1 is incorrect, they must be able to run the calculation using the data provided directly in the L1 virtual machine to check if your assertion matches the computed value (this is called executing a "fraud proof").

  Popular examples: Arbitrum, Optimism
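The three variants can be summarized as a small lookup of what each one posts to the L1 on top of the data needed to redo the computation. This is only an illustrative sketch: `RollupKind`, `extra_l1_payload`, and the payload labels are hypothetical names and do not correspond to any real rollup stack.

```python
from enum import Enum


class RollupKind(Enum):
    SOVEREIGN = "sovereign"
    ZK = "zk"
    OPTIMISTIC = "optimistic"


def extra_l1_payload(kind):
    """What a rollup posts to the L1 beyond the data needed to redo the computation."""
    if kind is RollupKind.SOVEREIGN:
        # Nothing extra: only the minimal data is posted.
        return []
    if kind is RollupKind.ZK:
        # A proof of the result that the L1 virtual machine must be able to verify.
        return ["zk_proof_of_result"]
    # Optimistic: an asserted result that can be challenged with a fraud proof
    # executed on the L1 virtual machine.
    return ["asserted_result"]
```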

Note: settlement refers to who decides which transactions are valid


Important note on ZK usage in rollups

The popular terminology here is very misleading. It makes it sound as if believing ZK is the future means you must support ZK rollups, and that other rollup solutions are inferior. In fact, all rollup types can use ZK cryptography in their implementation (and multiple do!). There is a movement to rename ZK rollups to validity rollups to avoid this problem, but renaming things is always difficult.

Here are some examples:

- Optimistic rollup: OP Stack ZK proof initiative (source). Optimistic rollup concepts can help reduce the cost of ZK solutions by only requiring expensive proofs to be generated and posted to the L1 if somebody suspects fraud.

- Sovereign rollup: generating ZK proofs of rollup state that are simply not validated on the L1 by default (example). ZK proofs can still be useful here to build efficient light clients, to minimize data overhead by using ZK for compression, and to prove subsets of application state, among many other benefits. These benefits are still available without submitting ZK proofs of the entire rollup state to a canonical contract like you would do in a full ZK rollup.

Flexibility of sovereign rollups

As you can see, sovereign rollups are the most flexible, as they have no additional constraints (no need to build a system that the underlying L1 can understand - you can build something totally custom).

This is a powerful concept - especially for games - for the following three reasons:

1. VM flexibility: no restrictions on the virtual machine run by the rollup

In a typical rollup, you are restricted to using a system that can be verified by the underlying network. This may sound like it is not an important point (after all, most blockchains support general computation as smart contracts, so surely they can execute no matter what you throw at them). However, it is not that straightforward.

For a non-technical audience, there are a few ways to convince yourself of this complexity:

- If having one virtual machine (ex: your game) run in a different virtual machine (ex: Ethereum) was easy, then compatibility between operating systems would also be easy (ex: have a Windows application run on a Mac), but most people know from experience this is not the case. Unless the developer takes extra care to make their application work on both platforms, at best you have to use slow emulators that only support a subset of features and pray your application works.

- A popular optimistic rollup called Optimism started development in 2019, yet at the time of writing (2024) still has not finished fully implementing the core protocol features to ensure full decentralized verification of their construction on Ethereum. Far from being the exception, this is the norm as (as of writing) not a single popular rollup platform has achieved this goal (source: l2beat.com).

Many efforts are being made to do the engineering just once upfront, and have the result be reusable. This is done by making optimistic or ZK platforms where the virtual machines they use are very common platforms like:

Therefore, it is tempting to think that this will soon be a solved problem (just compile your VM to one of these common architectures and you're done). However, these solutions bring a slew of their own challenges that are even harder to overcome with gaming as the use-case in mind:

- Performance is difficult. Some of these solutions may cause a 100x performance degradation (ex: ZK-ifying Machine Learning. source). For financial use-cases there may be enough financial value to throw expensive computers at the problem, but that is often not the case for games.

- Extra engineering may be required. For example, verifying Celestia's cryptographic commitments in a blockchain like Mina is not directly possible as Mina doesn't provide the right cryptography (by design, to maintain the performance of recursive SNARKs). Therefore, to accomplish this, you need to convert Celestia's proof into one that Mina can parse combined with a proof that this new proof (for Mina) is equivalent to the original proof (in Celestia). As new cryptographic constructions are coming out all the time, the need for these equivalence proofs does not seem like it will disappear anytime soon (especially given popular blockchains like Bitcoin, Ethereum, Cardano, etc. are very slow to adopt any new cryptographic standard). Most games simply do not have the budget to spend money on extremely difficult engineering problems such as this.

- Gas costs may be prohibitive. Just because you've created a proof that can be verified in Ethereum, it does not mean it will be cheap to do so. Many projects have died after months of development when, during final benchmarking, they realized that verifying their rollup state in Ethereum would cost thousands of dollars in gas fees alone (let alone any server costs for sequencers to generate the proof). For financial use-cases, there may be enough value that paying these fees is acceptable, but margins on gaming are typically too small to allow for this.

Important: these observations are NOT saying that using ZK for gaming is a dead end. In fact, in a lot of cases it is crucial. Remember that just because you are a sovereign rollup, it does NOT mean you are not leveraging ZK technology (see here).

Not being constrained by the underlying layer for settlement (deciding transaction validity) will play a key role in our construction.

2. Modular composability: easily combine many modular stacks into your solution

Most financial applications only require smart contracts a few hundred lines long at most as they have a very well-defined logic. Games, however, are much more broad in their requirements. Just like how web2 games typically require many teams from different disciplines to get together to be made, so will it be the case for web3.

With the growth of the modular ecosystem in crypto, there are now L2s and modular frameworks that provide many of the features and environments you might need for your game. However, piecing them together is difficult. If you want to properly use the underlying chain to do the settlement (i.e. not be a sovereign rollup), you need to make sure that the correctness of all these layers is not only verifiable on the virtual machine to which your game is deployed (ex: EVM), but also accessible from the same layer (ex: Arbitrum if you deployed your game to their L2).

If you remove the requirement that every part of every stack has to be validated right away on the same shared environment (i.e. you instead build a sovereign rollup), it's now much easier to juggle all these requirements. It increases the computation complexity of operating a game node (you have more stacks you need to monitor directly instead of assuming all the data will find its way to the chain your game is deployed to), but the simplification is often worth it.

Leveraging multiple layers like this will play a key role in our construction.

3. Easy forkability: forking does not require any onchain transaction

First, a terminology note: although the technical term we're discussing is called a fork, we will refer to this concept elsewhere in this document as modding as this is more thematically in-line with the intention in a gaming context.

Since sovereign rollup behavior is not enforced by a smart contract, forking does not require deploying a new contract - rather, forking is as simple as changing your own personal interpretation of the data. This may sound like an odd concept at first, but note that the same is true for Bitcoin as well. You can run a modified version of the Bitcoin codebase on your local machine that gives you a million BTC (and no transaction on the Bitcoin blockchain is required for you to run this modified code on your machine), but of course nobody will consider this fork legitimate. However, forks that gained real adoption have happened before (see Bitcoin Cash, which forked based on disagreement about what should be considered a valid Bitcoin block).
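To make "changing your interpretation of the data" concrete, here is a minimal sketch in which the same ordered L1 inputs are replayed under two different rule sets. Everything in it is an assumption made for illustration: the players, the `kill_monster` action, the gold amounts, and the `make_rules` / `replay` helpers are hypothetical and are not taken from any real game or engine.

```python
# Ordered inputs as posted to the L1; every node sees the same list.
# The players and the "kill_monster" action are hypothetical.
L1_INPUTS = [
    ("alice", "kill_monster"),
    ("bob", "kill_monster"),
    ("alice", "kill_monster"),
]


def make_rules(gold_per_kill):
    """Build a state transition function that awards gold_per_kill per monster."""
    def apply(state, tx):
        player, action = tx
        if action == "kill_monster":
            state = {**state, player: state.get(player, 0) + gold_per_kill}
        return state
    return apply


default_rules = make_rules(10)  # the "main" interpretation of the game
modded_rules = make_rules(5)    # a mod with lower gold emission


def replay(rules, inputs):
    """Fold the same L1 inputs through a chosen rule set to get a game state."""
    state = {}
    for tx in inputs:
        state = rules(state, tx)
    return state
```

Running `replay(default_rules, L1_INPUTS)` and `replay(modded_rules, L1_INPUTS)` yields two different gold balances from identical onchain data, and no onchain transaction was needed to create the second interpretation.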

However, for financial use-cases, such forkability is often not desired. It's easier to build an ecosystem of composable financial contracts if developers can get assurance of code immutability where they can visually see and audit the code. That is to say, DeFi developers are willing to give up sovereignty of the system to a blockchain (ex: Ethereum) so that it is not possible to change the interpretation of their system without having to change the interpretation of all of Ethereum itself (unlikely, but it has happened before, such as after the DAO hack in 2016).

For gaming, however, encouraging forkability of either a subset or of the whole game may be advantageous. It empowers players to openly iterate on the game rules to find the most viral variation of the game. The goal, therefore, should be to maintain forkability where possible while still maintaining composability with the rest of the web3 ecosystem.

A more formal way of defining this free-form forkability is to say that sovereign rollups do not allow 2-way bridging of assets that originated from the L1. For example, if you send ETH from the L1 to a sovereign rollup L2, how would the L1 know when you've sent the ETH back to the L1? It cannot understand the L2 state (and presumably you don't want to use any centralized oracle). However, for assets that originated from the L2, bi-directionality is possible (and in fact, this will be the key goal of our construction).

Difficulty of game composition

The ability to compose different games together (ex: using assets from one game inside another) has been one of the key mechanics people have said excites them about decentralized games. However, beyond the fact that games being on different chains makes composing them difficult (as mentioned before), even being on the same chain does not guarantee easy interoperability.

Say there are two games: A and B

- A was released first and has become a commercial success

- B is coming soon, and to encourage players to come try their game, they decide to give 100 gold to all players who have completed the tutorial of game A.

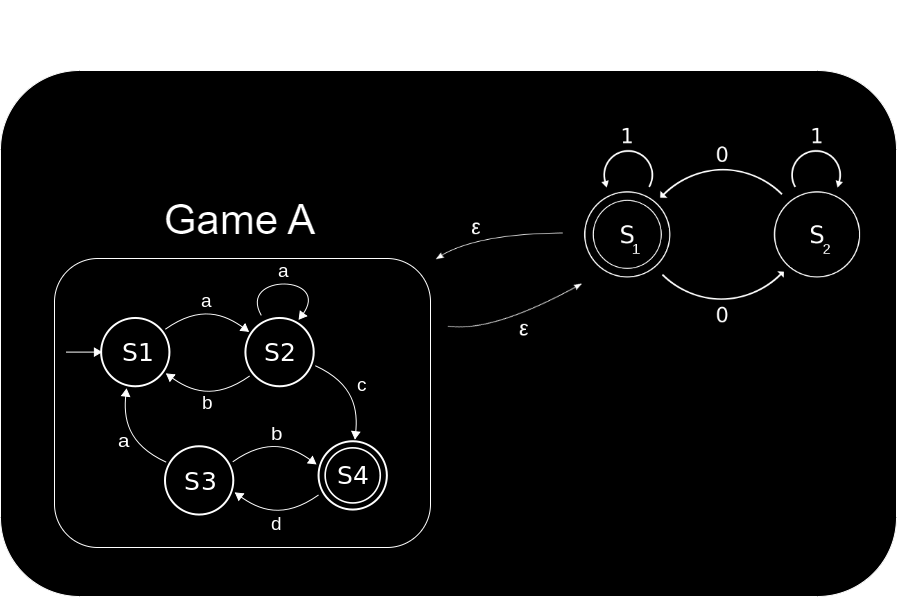

If you visualize these games as state machines, this essentially means you are embedding the logic of A (to know whether or not the user has completed the tutorial) inside game B.

However, what happens if game A updates (either because they simply update frequently, or because they don't like what game B is doing)? This will undoubtedly introduce errors in game B.

Additionally, it's hard to solve this by just making the game immutable. Although this is a viable option for some genres of games, large online games depend on frequent updates to keep players engaged. For example, Fortnite has had 338 patches since its release in 2017 (averaging one per 7 days), and other online games like Final Fantasy 14 have had 315 patches since release in 2010 (averaging one per 15 days).

A common approach to attempt to solve this problem is to separate your application state into a stable component that others can rely on, and a portion that may change during updates. The most natural place to do this would be for the game assets: for example, if your game has a gold ERC20 token, you guarantee this token will not change (but how gold is used in the game may vary per update). However, making gold your stable API implicitly constrains something else: how is gold created? Remember that the gold's circulating supply is decided by the mint function.

To think about why this constrains the minting function for gold, think of your game as a sovereign rollup (anybody can change their interpretation of the game rules as they wish). If a player thinks the game has too high gold emission and that the game economy would be better if it were lowered, they can publish new rules for interpreting the rollup data and try to convince more players to join in on that interpretation. However, if gold is a stable API, we cannot have players start mods with different gold emissions because each mod would require a new gold contract, whose supply and mint are decided by different logic.

In this way, game composition is not only difficult, but it can also drastically lower moddability of your game (even when picking a very minimal surface like just the tokens of your game). However, solving this dilemma will be one of the key benefits of our construction.

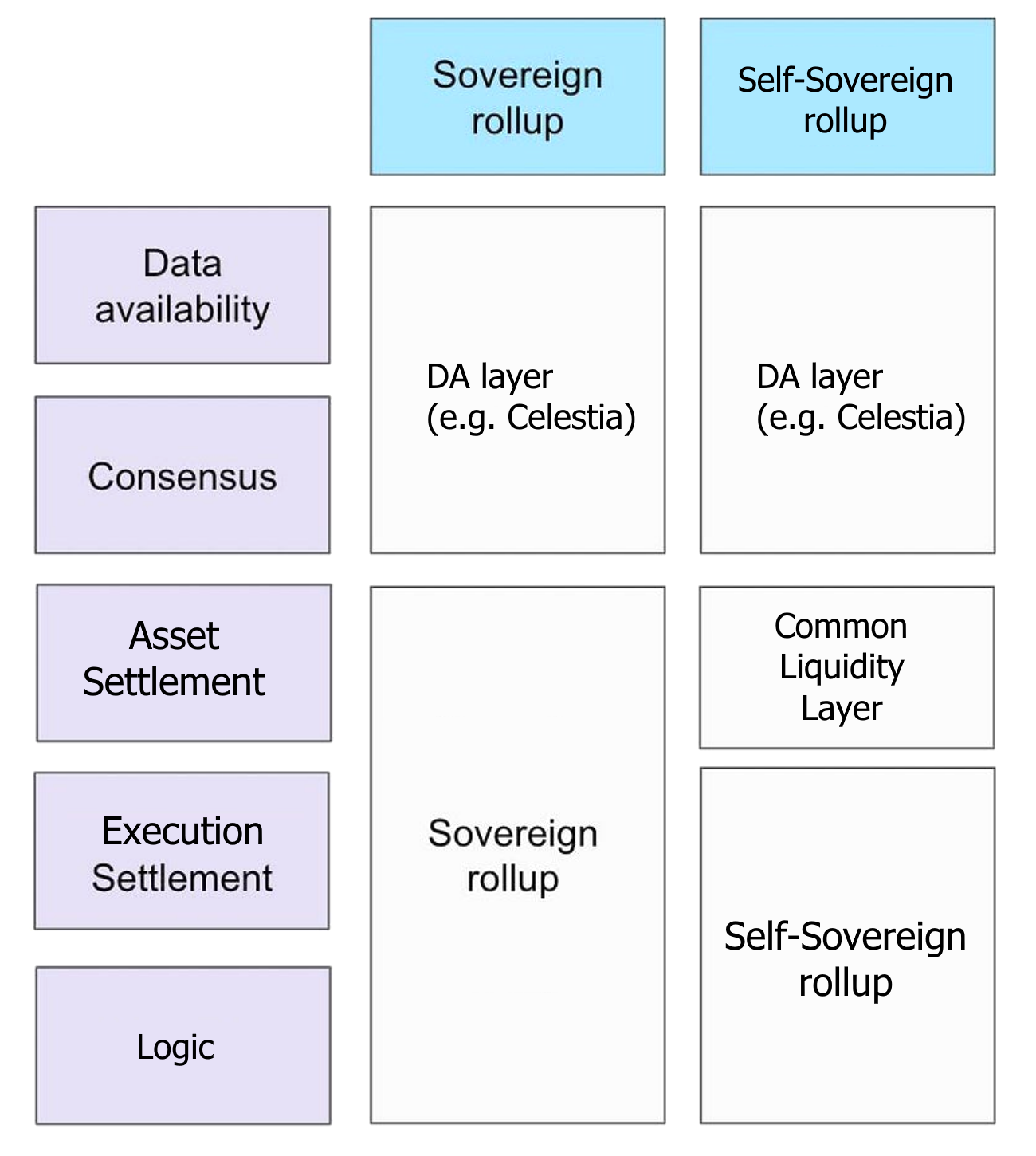

Our construction: self-sovereign rollups

The fragmentation problem is primarily caused because rollup solutions made for DeFi applications bundle asset management as part of execution despite these being separate things (this is a natural consequence of the fact these rollups are typically built re-using systems such as the Ethereum Virtual Machine (EVM) which bundles these 2 concepts at the virtual machine level). Our approach is to instead separate these into two parts: a common liquidity layer (which can be EVM) and an app-specific state machine as the rollup (note: this rollup doesn't have to be a blockchain and can just be a state machine that holds no assets and has no token if desired thanks to the flexibility of sovereign rollups).

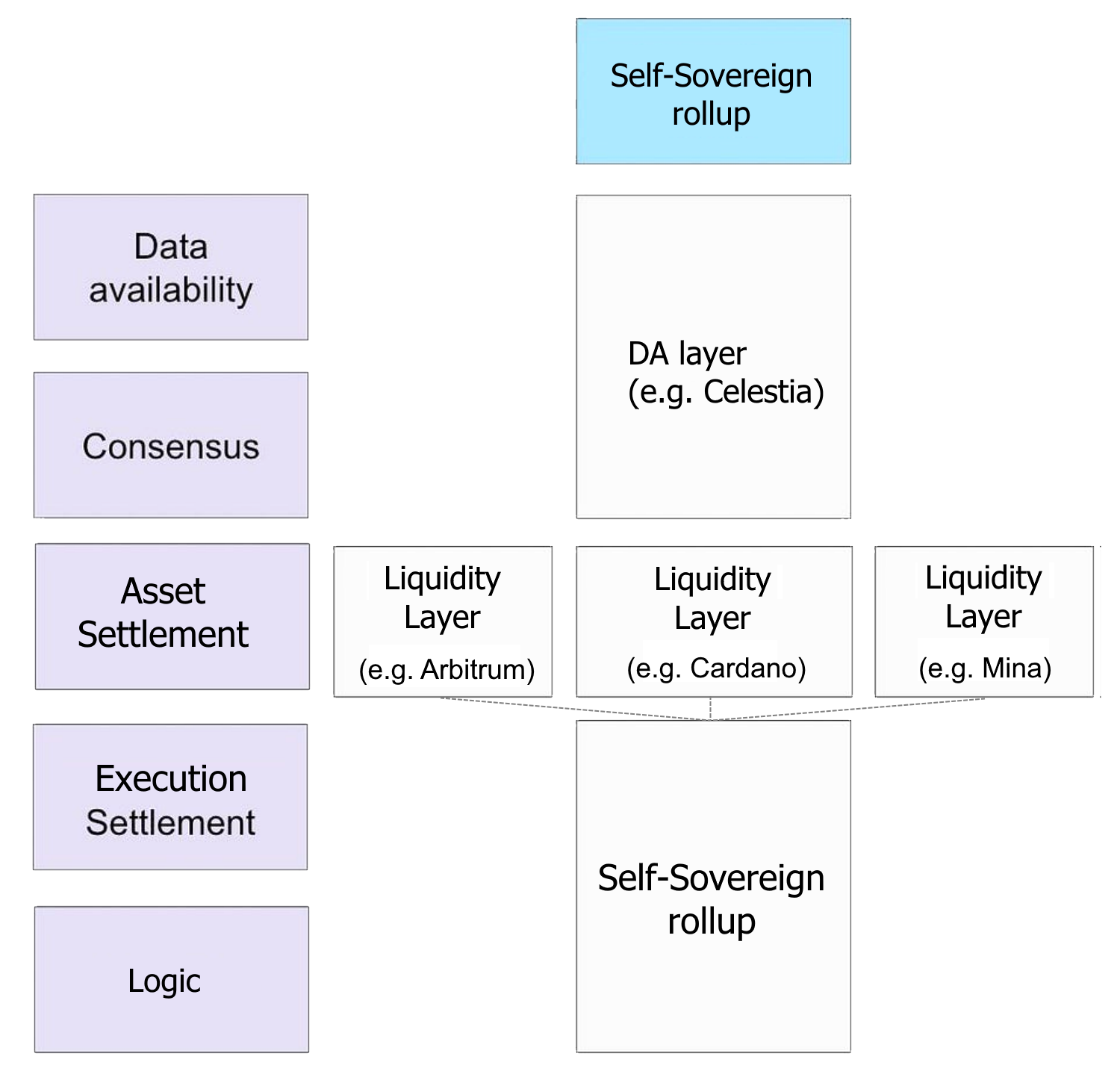

The common liquidity layer can be EVM based (ex: Arbitrum), but it could be another chain as well (ex: Cardano). Paima rollups are app-specific, so it's up to each game to decide what they want to use as the liquidity layer. In fact, Paima Engine supports multiple liquidity layers for applications, such as Tarochi which uses both Arbitrum and Cardano.

Adding new layers like this is fairly easy thanks to this property of sovereign rollups, which allows not just combining multiple liquidity layers as needed, but also leveraging different layers for their strengths (ex: Arbitrum for its high TVL, and Mina for its ZK functionality). In fact, given the moddability of sovereign rollups, mods of a game can even introduce new liquidity layers for their application (without forcing other mods to have to do this as well). You can learn more about how these layers are implemented in Paima from an engineering perspective here.

Typically in a sovereign rollup you are projecting data from the underlying blockchain you are monitoring into your rollup state. Although this is an extremely powerful concept (and it's how we keep track of asset ownership in the liquidity layers as well as power other primitives supported by Paima), assets born and managed on the L1 are constrained by its rules (there is a canonical, immutable implementation).

In other words, if your game has a gold coin with its contract defined entirely on the L1, then you also naturally need the logic to validate minting of new gold on the L1 as well, which will cause you to run into the problem of composability limiting moddability. To bypass this issue, we introduce a new concept called inverse projections - projections of data that originate from the rollup into the underlying L1.

Inverse projection will be key in unlocking 2 key features:

- Bi-directionality: the ability to move the assets from L2 → L1, as well as L1 → L2

- Moddability: the ability for mods to change anything they want about the assets (ex: add ways to earn gold, remove ways to use gold, add new ways to spend gold, etc.)

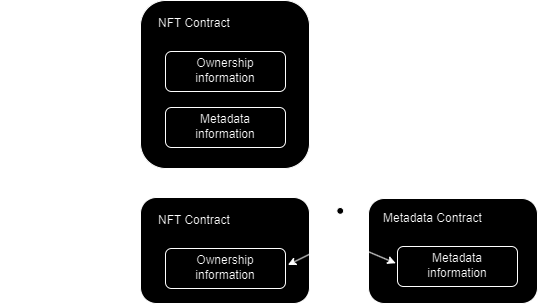

The way we achieve this is by splitting ownership information and metadata information into different contracts. For example, with NFTs (ERC721), contracts have a function to get the owner of an NFT (ownerOf), as well as a function to get the content of the NFT (tokenURI), and these are typically bundled into the same ERC721 contract.

To get the full information, it is up to every user to decide which metadata source they want to leverage. For OpenSea compatibility, games can provide a default source, but Paima will provide an NFT marketplace UI in the future that, unlike OpenSea, will allow users to choose which metadata source they want to use to properly resolve NFT content (ex: image, name, traits). You can learn more about the Solidity code we have created for this in our PRC-3 standard here.

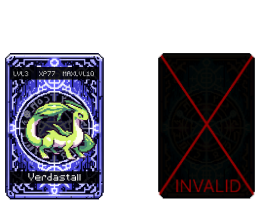

You can see an example of this in the Tarochi Monster NFT collection where NFTs representing monsters from our game rollup Tarochi can be openly traded on Arbitrum. It's possible to mint ERC721 tokens on Arbitrum that are not valid under the default Tarochi rules (ex: catching a monster that doesn't exist in the main version of the game, or catching a monster in a way that doesn't exist in the main game like a custom item). When querying the metadata for these NFTs from a given metadata source, users can use a special validity trait to know if the NFT is valid under that fork's set of game rules.
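The split between ownership and metadata can be sketched as follows. This is a toy model under stated assumptions, not the PRC-3 implementation: `OWNERS` stands in for the ownership contract's `ownerOf`, `MINT_LOG` stands in for what the rollup recorded about each mint, and the species, items, and resolver functions are all hypothetical.

```python
# Ownership lives on the liquidity layer (stand-in for an ERC721 ownerOf lookup).
OWNERS = {1: "0xAlice", 2: "0xBob"}

# What was recorded about each mint (hypothetical data).
MINT_LOG = {
    1: {"species": "slime", "caught_with": "net"},
    2: {"species": "dragon", "caught_with": "custom_item"},
}


def owner_of(token_id):
    """Ownership is the same no matter which game rules you follow."""
    return OWNERS[token_id]


def main_game_metadata(token_id):
    """Metadata source for the default rules: only slimes caught with a net exist."""
    mint = MINT_LOG[token_id]
    valid = mint["species"] == "slime" and mint["caught_with"] == "net"
    return {"name": mint["species"], "validity": valid}


def mod_metadata(token_id):
    """Metadata source for a mod that adds dragons and accepts any item."""
    mint = MINT_LOG[token_id]
    valid = mint["species"] in {"slime", "dragon"}
    return {"name": mint["species"], "validity": valid}
```

Token 2 has a single owner, but `main_game_metadata(2)` reports it as invalid while `mod_metadata(2)` reports it as valid. Choosing the metadata source is how a user chooses which set of game rules they care about.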

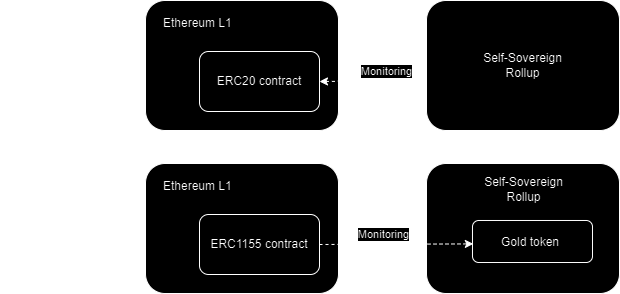

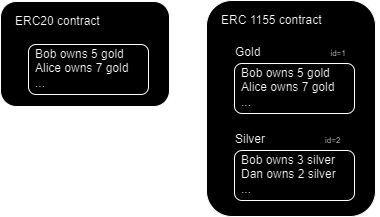

When it comes to fungible tokens (tokens that behave as currencies and not NFTs), ERC20 (the most popular token type for fungible tokens) is not usable in inverse projections because there is no way to assign metadata to a subset of tokens (ex: say these specific 50 tokens are from this set of game rules, but these other 100 tokens are from a different set of game rules).

Instead, we use ERC1155. ERC1155 is a way to combine multiple fungible tokens into a single contract (ex: instead of having both a gold ERC20 contract and a silver ERC20 contract, you would instead have a single ERC-1155 that contains both a gold asset and a silver asset).

Although ERC-1155 contracts are typically set up to track different assets as part of the same contract (ex: gold and silver), we can use the same contract structure to instead track sets of tokens and which game rules they are valid under - similar to what we did for ERC721. This is possible because for an ERC-1155 contract, each asset under its control has a uri function to get the metadata for that fungible token type (ex: metadata for gold, metadata for silver, etc.).

Therefore, we leverage the ERC-1155 concept, except that (just like in ERC721) every new mint gets a new id (you can learn more about the Solidity code behind this construction in the PRC-5 specification here).

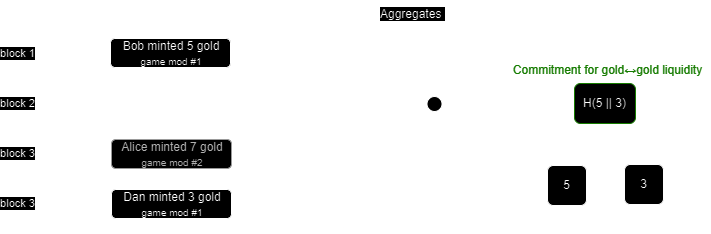

For example, with Tarochi, every time you move Tarochi Gold from the Tarochi rollup to Arbitrum, you will be creating a new id that contains the exact gold amount you moved, and other tools can know if this mint is valid under the game rules they care about by checking that id with the metadata source they are using.
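A toy model of this "one id per mint" bookkeeping is sketched below. It is not the PRC-5 Solidity code: `InverseProjectionLedger`, `valid_balance`, and the idea of passing in a set of ids judged valid by a metadata source are assumptions made for illustration.

```python
class InverseProjectionLedger:
    """Toy ERC-1155-style ledger where every mint receives a fresh token id."""

    def __init__(self):
        self.balances = {}  # (token_id, owner) -> amount
        self.next_id = 1

    def mint(self, owner, amount):
        """Move `amount` out of the rollup: creates a new id holding that amount."""
        token_id = self.next_id
        self.next_id += 1
        self.balances[(token_id, owner)] = amount
        return token_id

    def burn(self, owner, token_id, amount):
        """Move `amount` of a given id back into the rollup."""
        key = (token_id, owner)
        if self.balances.get(key, 0) < amount:
            raise ValueError("insufficient balance for this id")
        self.balances[key] -= amount


def valid_balance(ledger, owner, valid_ids):
    """Sum only the ids that the caller's metadata source considers valid."""
    return sum(
        amount
        for (token_id, who), amount in ledger.balances.items()
        if who == owner and token_id in valid_ids
    )
```

Two mints by the same player produce two distinct ids, so a tool following one rule set can count only the ids it accepts, while a tool following a mod can count a different subset of the same ledger.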

Given that ERC-1155 is supported by popular wallets and marketplaces like MetaMask and OpenSea, this approach allows us to build 2-way bridgeable tokens (you can mint gold on Arbitrum to start the inverse projection, then burn it on Arbitrum to move it back to the rollup). However, this still leaves 2 problems to solve:

- How can we build DEXs that use these inverse ERC-1155 projection tokens?

- More generally, how can we compose this with other games without having to embed the whole state machine of one game into the other (as discussed here) to achieve this?

1. Building a DEX

There are 2 popular kinds of exchanges:

- Order book: a global list of buy & sell orders, with possibly a matching engine that matches overlapping prices. Examples of this include CEXs (ex: Binance), NFT marketplaces (ex: OpenSea), many DEXs using UTXO models (sprkfi, MuesliSwap), and many ZK-powered DEXs (LuminaDEX, Renegade).

- AMM (Automated Market Makers): buy & sell orders are executed directly against liquidity pools, which automatically (programmatically) update the price based on a formula. Examples of this include Uniswap, PancakeSwap and Curve.

AMMs are often used in decentralized blockchain settings because they tend to have lower gas fees (no need for market makers to change their orderbook orders if the price deviates too much, and no need for a matching engine executing orders for users).

However, they have one problem in regards to our inverse projection standard: they are automatic. That is to say, they are not natively aware of the fact there are different modes for the game that may exist, and that certain tokens are only valid against certain rule sets.

Fortunately, there exists a straightforward immediate solution: simply use OpenSea as an orderbook. They support ERC1155 after all. However, this is not a long-term solution:

- OpenSea is not aware of the different game rules that might exist. It can facilitate showing valid and invalid tokens via a validity trait (which means you can do trait-based collection offers), but ideally this would be abstracted away from the user and the user just picks the game rules they want to use from a list.

- OpenSea's fee model is not designed for fungible tokens (they take a 2.5% fee, which is a lot compared to Uniswap's 0.3%)

- Collection offers depend on trusting the OpenSea team

- OpenSea is not deployed to every blockchain

Therefore, users would be better served by a purpose-built DEX that tackles these issues.

Custom DEX: Placing sell orders

Placing sell orders on this DEX is straightforward: users withdraw gold from Tarochi onto Arbitrum (mint a new ERC1155 id), and then create a sell order on the DEX contract.

Custom DEX: Placing buy orders

Buying tokens directly is simple: specify the amount of tokens you want to purchase, and the DEX will fetch sell orders sorted by price until your order is completed (some extra sell orders can be included to mimic slippage and avoid the transaction failing if a sell order is filled by somebody else while your transaction is being submitted to the blockchain).

However, the difficulty comes in placing a buy order without buying tokens right away (ex: if you want to buy 1000 tokens for $1 and are willing to wait for the price to reach that point). The difficulty is that you are most likely not interested in buying tokens for every variation of the game (as some may have no value), but rather you want to place an order for a specific variation (or at most a set of variations) of the game you are interested in.
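The direct-buy path can be sketched as a greedy walk over sell orders, cheapest first. This is an assumption about how such a DEX could work, not its actual contract logic: `SellOrder` and `fill_market_buy` are hypothetical names, prices are plain numbers in some quote asset, and the extra fallback orders used to mimic slippage are left out.

```python
from dataclasses import dataclass


@dataclass
class SellOrder:
    token_id: int   # the ERC1155 id created when the gold was minted
    amount: int     # how many tokens are for sale
    price: float    # price per token, in the quote asset


def fill_market_buy(orders, want):
    """Return (token_id, amount) fills, taking the cheapest sell orders first."""
    fills = []
    remaining = want
    for order in sorted(orders, key=lambda o: o.price):
        if remaining == 0:
            break
        take = min(order.amount, remaining)
        fills.append((order.token_id, take))
        remaining -= take
    if remaining > 0:
        raise ValueError("not enough sell-side liquidity")
    return fills
```

A buyer who only cares about one set of game rules would first filter `orders` down to the token ids valid under those rules before calling `fill_market_buy`.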

Therefore, when placing a buy order, you are not just providing how many tokens you want to buy, but also specifying under which set of rules you are interested in buying these tokens. If the validity of tokens under a set of game rules can be verified using a ZK proof, then this is not so difficult. However, there is no guarantee for that to be the case.

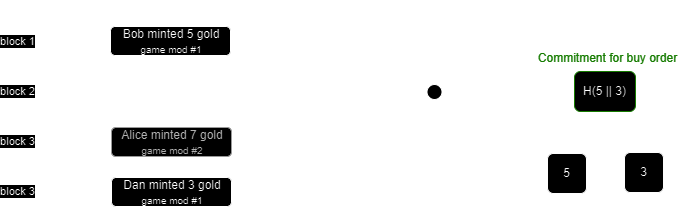

To handle the case where there is no ZK proof of validity, buy orders must (instead of specifying all mints validated by a ZK proof) provide a commitment to a specific set of ERC1155 mints they consider to be valid.
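One simple way to picture such a commitment is a hash over the sorted set of mint ids the buyer accepts. The text does not specify the commitment scheme, so this is purely an assumption for illustration (a Merkle root would be a more typical choice when membership proofs need to be small); `commit` and `can_fill` are hypothetical helpers.

```python
import hashlib


def commit(valid_ids):
    """Commit to a set of mint ids by hashing them in sorted order."""
    payload = ",".join(str(i) for i in sorted(valid_ids)).encode()
    return hashlib.sha256(payload).hexdigest()


def can_fill(commitment, revealed_ids, offered_id):
    """A fill is acceptable only if the revealed set matches the buyer's
    commitment and the offered mint id is a member of that set."""
    return commit(revealed_ids) == commitment and offered_id in revealed_ids
```

Under this sketch, an id minted after the order was placed can never satisfy `can_fill`, because adding it to the revealed set changes the hash. That is exactly the limitation the next paragraph addresses.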

While this protects the buyer from invalid mints, it introduces a new problem: what if somebody wants to fill the buyer's order using new gold that was minted after the buyer placed their order (and is therefore not included in the buyer's commitment), but is still valid under the same game rules the buyer is interested in? Typically, buyers will not want to keep their computer running 24/7 to constantly update their orders to include the latest mints. To solve this, we introduce a staking functionality.

Custom DEX: Facilitating filling of old orders by providing liquidity to game rules

The problem of buyers not being able to be matched against new mints created after their order was placed can be solved by creating a gold ↔ gold trading pair to facilitate trading new gold under a set of rules with old gold under the same set of rules.

Conceptually, this is similar to what buyers do. However, unlike buyers, who are trading between different assets (e.g. trading gold for ETH), stakers are providing liquidity on a gold ↔ gold pair (always at a 1:1 rate).

Since the game can monitor activities on the common liquidity layer (using projections in general as discussed here), games can actively reward liquidity providers with new gold in-game for providing liquidity on this pair. This acts as a reward for the staker for timely updates of their commitment to ensure newly minted gold can always quickly be transferred into old gold as needed to facilitate trading.
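The gold ↔ gold pair can be pictured with a small Python model. This is a sketch under stated assumptions rather than the contract's real interface: the `GoldPair` class, its method names, and the 30 basis point fee are all hypothetical. It shows the two properties described above: swaps happen 1:1 (minus a fee) between ids the staker has committed to, and the staker must update the commitment before newly minted gold becomes swappable.

```python
from dataclasses import dataclass, field


@dataclass
class GoldPair:
    """A staker's gold <-> gold pool for one interpretation of the game rules."""
    accepted_ids: set                             # ERC1155 ids the staker treats as valid
    reserves: dict = field(default_factory=dict)  # id -> amount held by the pool
    fee_bps: int = 30                             # hypothetical swap fee, in basis points
    fees_earned: int = 0

    def update_commitment(self, new_ids):
        # The staker extends the commitment as new valid mints appear.
        self.accepted_ids |= set(new_ids)

    def swap(self, id_in, id_out, amount):
        """Swap `amount` of id_in for id_out at a 1:1 rate, minus the fee."""
        if id_in not in self.accepted_ids or id_out not in self.accepted_ids:
            raise ValueError("id is outside this pool's commitment")
        fee = amount * self.fee_bps // 10_000
        out = amount - fee
        if self.reserves.get(id_out, 0) < out:
            raise ValueError("insufficient reserves")
        self.reserves[id_out] -= out
        self.reserves[id_in] = self.reserves.get(id_in, 0) + amount
        self.fees_earned += fee
        return out
```

For example, a seller holding freshly minted gold (id 2) can only swap it into older gold (id 1) after the staker has called `update_commitment([2])`, which is the timely update the in-game rewards are meant to encourage.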

Note that a natural consequence of this is that the resulting staker set forms what resembles a Proof of Stake system. That is to say, you can see onchain the list of all the stakers and which ones have overlapping commitments to know which set of game rules they are providing liquidity for. These stakers earn yield from both the transaction fees for facilitating gold ↔ gold trades and any gold inflation the game pays them for their service.

This naturally creates a social consensus on the state of the game via the stakers, but it does so in such a way that even if all the stakers collude to set an invalid commitment for the pair they're providing liquidity to, nobody loses any money. Users are kept safe as they can easily check whether the staker is trading gold under the same game rules they are interested in. Therefore, you can leverage the benefits of Proof of Stake without many of the downsides. Notably, there are:

- No 51% attacks: nobody can force you to swap with their trading pair.

- No censorship: stakers do not decide which blocks are valid in the network (they are not sequencers or block producers).

Rather, they are simply asserting which set of assets they believe form the most interesting set of game rules.

Additionally, these stakers are financially incentivized to always be monitoring the most popular variation of the game for two reasons:

- If the game provides them gold rewards for their service, they're financially incentivized to provide liquidity to the pair that will give them the highest rewards (and presumably the most popular version of the game is the one where gold has the highest price on the open market)

- Sellers pay a transaction fee when they use the staker's service to swap gold to facilitate filling a buy order. Therefore, stakers are incentivized to monitor the version of the game rules where there are the most sellers

However, just because there are incentives to do something, it doesn't mean users are forced into it. Anybody can create a new mod for the game with new rules and start providing liquidity themselves as they start attracting new players. Even if a mod always has a small user base, its dedicated community can still enjoy the full infrastructure to trade everything from NFTs to fungible tokens on the open market.

2. Composing with other games

The consensus that the DEX's liquidity providers naturally form around game rules can also help facilitate composition with other games. If game B wants to reward players of game A, it no longer has to actively monitor game A's development and make sure it always uses the most up-to-date version of its state machine. Instead, it can monitor which game rules have the most liquidity provided for them (possibly weighted by other metrics such as the price of the token for this rule set on the open market).

In this way, games can start forming a web of composition between various titles that dynamically ebbs and flows as each game updates over time based on new innovative game rules that emerge naturally from players.
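A minimal sketch of how game B might pick which interpretation of game A to follow is shown below. The function name, the shape of the `stakes` input, and the price weighting are illustrative assumptions; how a real game reads this data from the liquidity layer is not specified here.

```python
from collections import defaultdict


def canonical_rule_set(stakes, prices=None):
    """Return the rule-set id with the most (optionally price-weighted) liquidity.

    stakes: iterable of (rule_set_id, staked_amount) pairs, one per staker
    prices: optional mapping rule_set_id -> token price on the open market
    """
    totals = defaultdict(int)
    for rule_set, amount in stakes:
        totals[rule_set] += amount
    if not totals:
        raise ValueError("no liquidity has been provided")

    def score(rule_set):
        weight = 1 if prices is None else prices.get(rule_set, 0)
        return totals[rule_set] * weight

    # Iterating in sorted order breaks ties deterministically by rule-set id.
    return max(sorted(totals), key=score)
```

Weighting by price matters when a mod attracts a large amount of low-value gold: raw liquidity alone would favor it, while the price-weighted score favors the variation the market values more.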

Final note: the Paima Ecosystem Token

Self-Sovereign Rollups provide a very powerful mechanism that empowers players to control the direction of the game. Although any player or developer can run a game themselves (whether it be a popular variation or the new mod they've been working on), realistically many will want to outsource this to a common infrastructure layer.

The Paima Ecosystem Token will naturally play a role in running shared infrastructure for the Paima Ecosystem, for example, by running shared sequencers for appchains built using Paima's ZK layer.

We will have more information about the ecosystem token coming soon, so be sure to follow the Paima team on Twitter and other platforms.

Conclusion

Self-sovereignty bestows upon each participant the power to interpret the state of a game themselves, free from any logic enforced on them by the developers or sequencers. This autonomy ushers in an era where the game world is cultivated not through majority rule or game developer decrees, but through organic communities fostered around shared interpretations of rollup state.

This freedom enables users to organically converge on the most engaging and enjoyable game mechanics over time, akin to a natural selection process in evolution, yet with the potential for diverse paths. It allows participants to experiment with and select the features that best enhance their experience, fostering a dynamic and evolutionary development of game mechanics driven by user preference and creativity.

Employing common asset contracts across diverse game rules facilitates the free exchange of assets among different interpretations of the game state. Even for assets that do not have a common interpretation between a given pair of worlds, players venturing across these worlds can trade assets through players who track and see value in multiple variations of the game. Unlike bridges, trusting these players is not required as you are not temporarily entrusting your assets to them, but rather facilitating the conversion of your assets between these worlds (whose states can both be verified locally on your machine).

Through this mechanism, composability of assets (not just between variations of the same world, but even between entirely different games) is possible without putting the onus on games to decipher each other’s intricate state mechanisms. This interoperability ushers in a fluid commerce of assets, navigating through the most liquid channels of trade, thereby engendering a vibrant tapestry of gaming ecosystems where diversity and the agency of the player reign supreme.

If this excites you, now is a great time to get involved in the Paima Ecosystem and help build the future of decentralized gaming. Additionally, if you haven't explored Tarochi yet, you can do so below: